Project Aeon - Modular local LLM system

2025-ongoing

About the Project

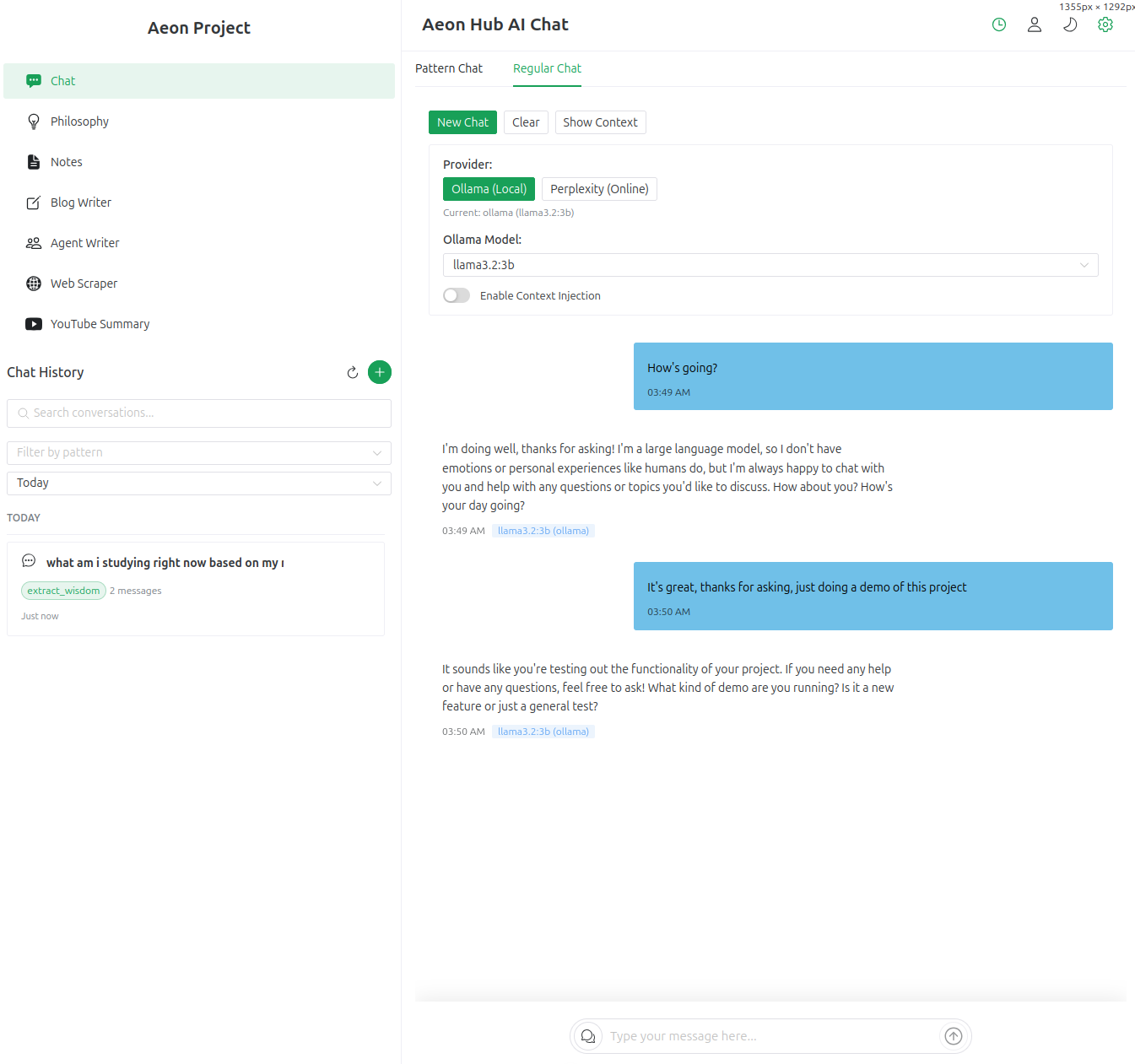

Aeon is my personal AI assistant that runs entirely offline, so no data leaves the machine. The backend is built with FastAPI and uses ChromaDB for semantic search, the UI is written in Vue 3, and Ollama runs the LLMs locally. It is still a work in progress.
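To give a rough idea of the retrieval step behind the semantic search, here is a minimal Python sketch. It is not Aeon's actual code: a toy bag-of-words similarity stands in for sentence-transformers embeddings and ChromaDB queries, and the function names (`embed`, `retrieve`, `build_prompt`) and the sample notes are hypothetical. It uses only the standard library so it runs anywhere.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. Assumption: stands in for a real
    sentence-transformers model, which would return a dense vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, notes: list[str], k: int = 2) -> list[str]:
    """Return the k notes most similar to the query.
    Assumption: a vector database would do this lookup in the real system."""
    q = embed(query)
    ranked = sorted(notes, key=lambda n: cosine(q, embed(n)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Combine retrieved notes and the question into one prompt string."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Context:\n{joined}\n\nQuestion: {query}\nAnswer:"


# Hypothetical sample data, for illustration only.
notes = [
    "The backup job runs every night at 2am",
    "Vue components live in the src/components folder",
    "ChromaDB stores note embeddings on local disk",
]

question = "where are embeddings stored"
prompt = build_prompt(question, retrieve(question, notes, k=1))
print(prompt)
```

In a full pipeline, the resulting prompt would then be passed to a locally served model, so the question, the notes, and the answer all stay on the same machine.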

Key Learnings

- Vector databases and semantic search (RAG architecture)

- FastAPI and modern Python backend patterns

- Vue 3 Composition API with TypeScript

- Local LLM deployment and optimization

- Privacy-preserving AI system architecture

- Integration of multiple AI frameworks

Tech Stack

FastAPI, ChromaDB, Vue 3, TypeScript, Naive UI, Fabric AI, Ollama, sentence-transformers